Releasing the first Multimodal CAD Agent for Editable 3D Models

Try out our Multimodal CAD Agent in BETA here

The Challenge: Bridging the Gap Between Ideas and 3D Models

Generative AI has transformed how we create content in our daily lives. From writing and art to images and videos, we've seen an explosion of tools that turn ideas into reality with just a few prompts. Yet in the world of 3D design, the journey from concept to model remains stubbornly complex and time-consuming.

CADbuildr is on a mission to accelerate and democratize 3D design workflows. Generative AI is key to achieving this:

- Democratize by removing the barriers to entry for CAD: You shouldn't have to spend 1,000+ hours becoming proficient in a specific software package to bring your ideas to life.

- Accelerate by predicting and assisting with designer workflows, removing repetitive tasks, and enabling entire assemblies and parts to be generated at once.

Whether you're a seasoned engineer or a hobbyist maker, translating ideas into CAD designs still requires extensive software knowledge and technical expertise. While AI has revolutionized creative workflows across industries, 3D design has remained largely unchanged – until now.

Introducing our chat

Today, we're excited to announce a significant milestone in that journey: CADbuildr's Multimodal CAD Agent. This AI-powered tool represents a fundamental shift in how we approach CAD design, allowing you to:

- Generate 3D models from natural language descriptions

- Convert reference images or sketches into editable CAD designs

- Provide feedback through screenshots and pencil drawings on the generated models

- Modify existing designs through simple text commands

- Export production-ready files for manufacturing

What sets our agent apart is its ability to create fully editable models. Behind the scenes, it generates code built on top of our open-source CAD library, Foundation.

Unlike other AI-generated content, these aren't just static outputs – they're proper CAD models that you can refine, modify, and integrate into your existing workflows.

How It Works

The Multimodal CAD Agent uses advanced AI to understand your intent across different input types:

- Text to CAD: Describe your design in natural language, and the agent creates a corresponding 3D model

- Image to CAD: Upload reference images or sketches to generate detailed 3D representations

- Interactive Refinement: Iterate on your design through natural conversation with the agent

- Editable Output: Access and modify the underlying CAD structure using CADbuildr's Foundation library

You can also export STL and STEP files, or open the model directly in the Playground for editing.

What it is good at

Simple parts generation

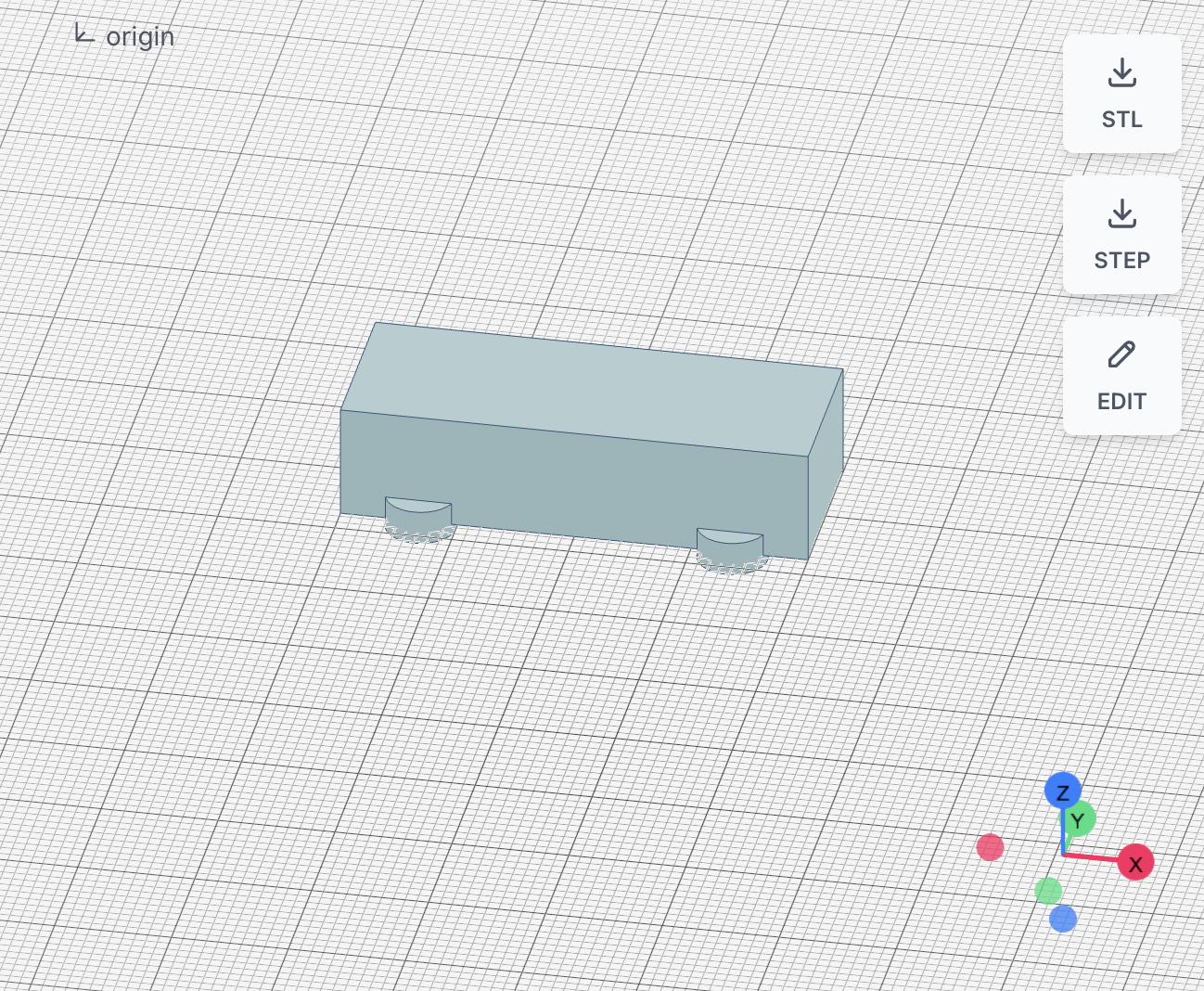

If you are a hobbyist or new to CAD, creating parts can be challenging and time-consuming. For simple parts, you can start with a basic text prompt. In this example, we asked the chat to generate a rectangular plate with rounded corners.

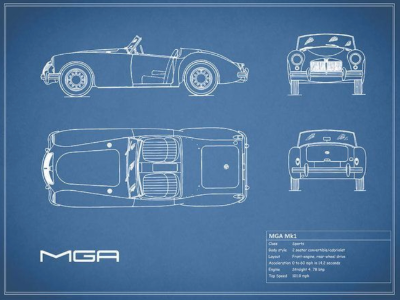

Simple parts from sketches/images

You can directly upload an image of the part you want to generate.

Modifying existing parts with a hand-drawn sketch

The agent can take an existing part and modify it based on a hand-drawn sketch. This enables quick iterations on designs without having to start from scratch each time.

Parametric assembly generation

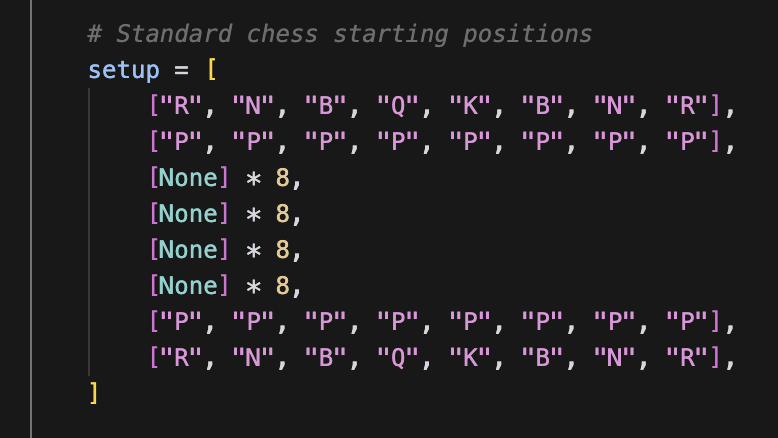

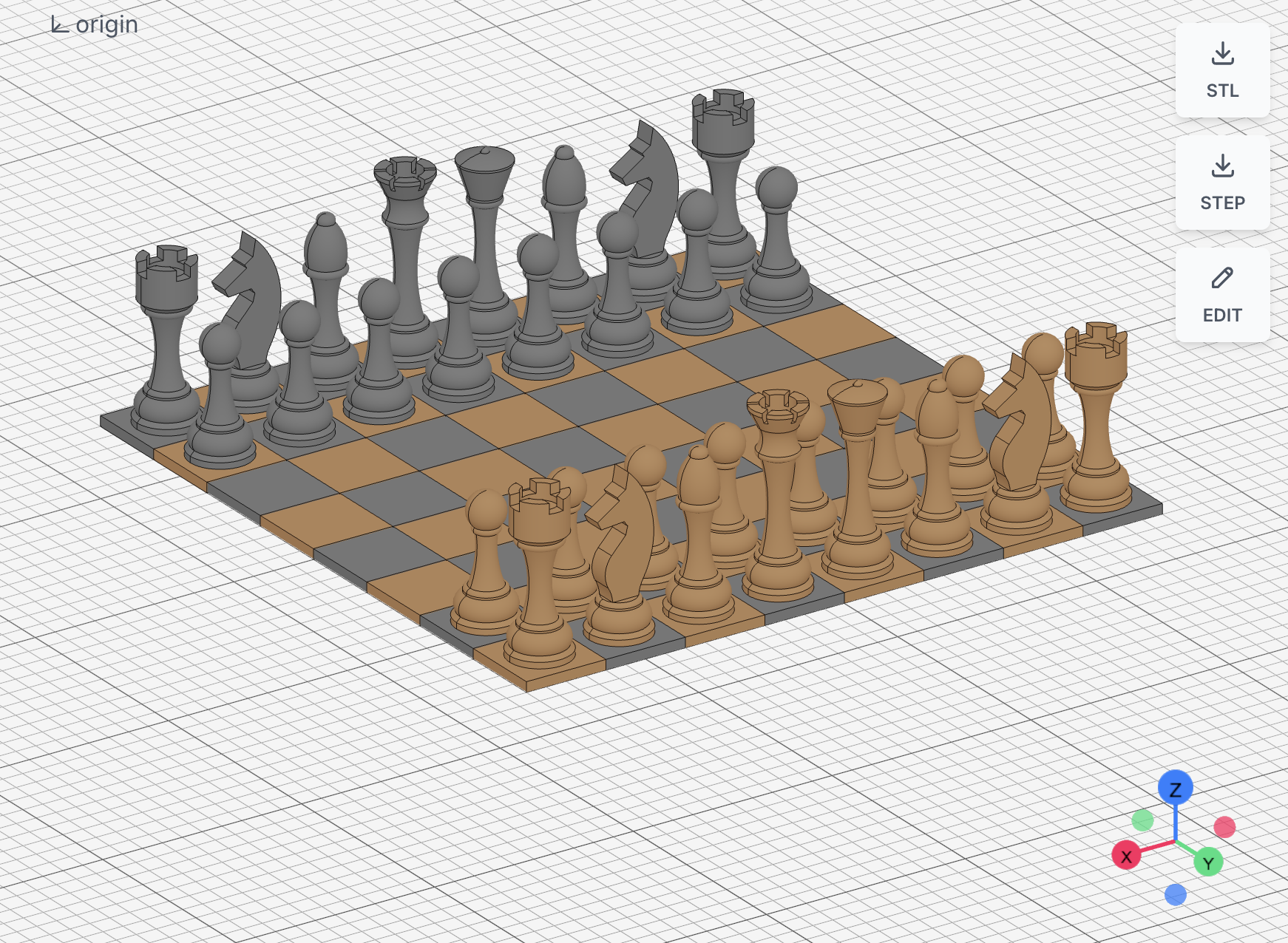

The chat is quite good at creating parametric designs. In one example, we asked it to generate a chess board from individual parts, and it developed its own way to represent the board layout. This would be almost impossible to do manually in CAD software.
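To give a sense of what "parametric" means for a layout like this, here is a minimal sketch in plain Python. It is not the agent's output and does not use the Foundation API; the `Square` class, the `board_layout` function, and the `square_size` parameter are hypothetical names chosen for illustration. The idea is that every square's position is derived from a couple of parameters, so changing one number rescales the whole board.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Square:
    """One board square, described by its chess coordinates and its center position."""
    file: str    # column letter, "a" to "h" on a standard board
    rank: int    # row number, starting at 1
    x: float     # x coordinate of the square's center
    y: float     # y coordinate of the square's center
    dark: bool   # True for dark squares


def board_layout(square_size: float = 40.0, n: int = 8) -> list[Square]:
    """Compute the center and color of every square on an n x n board.

    square_size and n are illustrative parameters (assumptions), not part
    of any CADbuildr API.
    """
    squares = []
    for row in range(n):
        for col in range(n):
            squares.append(
                Square(
                    file=chr(ord("a") + col),
                    rank=row + 1,
                    x=(col + 0.5) * square_size,
                    y=(row + 0.5) * square_size,
                    # With a1 at row 0, col 0, squares with an even index sum are dark.
                    dark=(row + col) % 2 == 0,
                )
            )
    return squares


# Changing a single parameter regenerates the whole layout.
small_board = board_layout(square_size=25.0)
```

In a real CAD model, each computed position would drive the placement of a square part; the placement step is left out here because it depends on the CAD library being used.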

Math-based parts generation

In this example, we asked the chat to generate a NACA airfoil. The chat generated a parametric airfoil that we can then modify. It used the actual formulas of the NACA airfoil to generate the geometry.
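For readers unfamiliar with these formulas, the sketch below shows the standard NACA 4-digit equations in plain Python. It is an illustration under stated assumptions, not the code the chat produced: we assume the 4-digit series (the example above does not say which series was requested), a chord length of 1, and the usual open trailing edge. The function name `naca4_points` and its parameters are hypothetical, and no Foundation calls are used.

```python
import math


def naca4_points(code: str, n: int = 50):
    """Return (upper, lower) surface points for a NACA 4-digit airfoil, chord = 1.

    code is the 4-digit designation, e.g. "2412": 2% maximum camber,
    located at 40% chord, with 12% maximum thickness.
    """
    m = int(code[0]) / 100.0    # maximum camber
    p = int(code[1]) / 10.0     # chordwise position of maximum camber
    t = int(code[2:]) / 100.0   # maximum thickness

    upper, lower = [], []
    for i in range(n + 1):
        # Cosine spacing clusters points near the leading and trailing edges.
        x = 0.5 * (1.0 - math.cos(math.pi * i / n))

        # Half-thickness distribution (open trailing edge coefficients).
        yt = 5.0 * t * (
            0.2969 * math.sqrt(x)
            - 0.1260 * x
            - 0.3516 * x**2
            + 0.2843 * x**3
            - 0.1015 * x**4
        )

        # Mean camber line and its slope.
        if m == 0.0 or p == 0.0:
            yc, slope = 0.0, 0.0    # symmetric airfoil
        elif x < p:
            yc = m / p**2 * (2.0 * p * x - x**2)
            slope = 2.0 * m / p**2 * (p - x)
        else:
            yc = m / (1.0 - p) ** 2 * ((1.0 - 2.0 * p) + 2.0 * p * x - x**2)
            slope = 2.0 * m / (1.0 - p) ** 2 * (p - x)

        # Thickness is applied perpendicular to the camber line.
        theta = math.atan(slope)
        upper.append((x - yt * math.sin(theta), yc + yt * math.cos(theta)))
        lower.append((x + yt * math.sin(theta), yc - yt * math.cos(theta)))
    return upper, lower


upper, lower = naca4_points("2412")
```

Because the shape is driven entirely by the three values in the designation, changing `"2412"` to `"0012"` yields a symmetric profile with the same thickness. This is the kind of edit a parametric model makes easy.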

What it is not yet good at

Complex sketches or assemblies

The chat is not yet good at creating complex assemblies: it often gets even simple orientations wrong. This is an issue we are actively working on. It also struggles with complex sketches. In this example, when asked to generate a car from a reference image, the chat simplified the car to a box and oriented the wheels the wrong way.

It can also produce code that is not valid for the CAD library, which results in an error message. However, the generated code is often still useful as a starting point.

How it fits in the CADbuildr ecosystem

We are releasing the Multimodal CAD Agent as part of the rollout of the CADbuildr Hub, which provides tools for editing, collaborating on, and sharing 3D models (more on that later).

Conclusion

We are excited to see how this tool will be used and how it will evolve. We are convinced this is a first step in applying generative AI to CAD workflows. If you have any feedback or suggestions, we would love to hear from you.